Lex's Fortnightly Creative AI Experiments: Number 1

Every two weeks, I will aim to build a prototype or try out something new using generative AI, and share the new projects and talks I am working on in the same space.

Echelons of Dreamscapes

I officially became part of the Creative Partners Program by Runway, which provides a select group of artists and creators with exclusive access to new Runway tools and models.

So I got a complimentary Unlimited plan, 1 million credits + opportunities for collaboration (I will be sharing more on a work-in-progress collaboration with Runway in future newsletters).

To celebrate this, I made a film with the help of friends Lilian and Tom for the ‘Art in Architecture exhibition’ by POC in Architecture.

Echelons of Dreamscapes is an experimental film that delves into the subconscious terrain of an urban dreamer's mind. Using London's ever-changing cityscape as a backdrop, the film reimagines the everyday into a surreal narrative.

While generative AI tools allow me to bring something to life faster than ever before, craft and skill are still very much needed to make a great piece of work.

I recorded my process below, which included:

Creating a narrative for the film

Mapping out what each scene would be (there are 32 in total!)

Creating each scene in Midjourney, hoping that you can get close enough to the vision you have in your head.

Using Runway’s Gen-2 model with the images created in Midjourney to generate 32 video clips of 4 seconds each, again hoping that you can get close enough to the vision you have in your head.

Using Topaz Video AI to enhance the video quality.

Writing a script that would work with the film.

Getting Lilian to record herself speaking the script alongside the scenes over Google Meet, so I could send the clips, script and references over to Tom so that he could edit and do the voice-over.

Note - I had to crunch a lot of footage into a 2-minute clip, so it is super fast.

Make Real by tldraw

This week on Twitter/X, I came across the founder of tldraw - a white-boarding application and an engine for applications that render React components onto a canvas interface. He shared a prototype which allows someone to simply sketch an interface on the tldraw canvas, press a button, and get a working website in seconds.

The prototype is powered by OpenAI’s GPT-4V, a large language model which is capable of accepting images as input. I have been exploring image classification and other computer vision models since the days of ImageNet, and I am very excited to try out GPT-4V in future experiments.
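To give a feel for what "accepting images as input" means in practice, here is a minimal sketch of how a sketch image and some instructions could be packaged into one request. It is not tldraw's actual code. The function name, the system prompt and the `gpt-4-vision-preview` model name are my own assumptions for illustration; the message layout (a text part plus an `image_url` part holding a base64 data URL) follows the shape OpenAI's chat API uses for image input. It only builds the payload and does not call any API.

```python
import base64


def build_make_real_request(png_bytes: bytes, instructions: str,
                            model: str = "gpt-4-vision-preview") -> dict:
    """Package a canvas sketch and text instructions into one chat request.

    Hypothetical helper: the name, system prompt and default model are
    assumptions for illustration, not taken from the tldraw starter kit.
    """
    # Images are sent inline as a base64 data URL.
    encoded = base64.b64encode(png_bytes).decode("ascii")
    return {
        "model": model,
        "max_tokens": 4096,
        "messages": [
            {
                "role": "system",
                # Assumed prompt: ask for a single self-contained HTML file.
                "content": "Turn the wireframe into a single HTML file.",
            },
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": instructions},
                    {
                        "type": "image_url",
                        "image_url": {
                            "url": f"data:image/png;base64,{encoded}"
                        },
                    },
                ],
            },
        ],
    }


if __name__ == "__main__":
    # Placeholder bytes stand in for a real PNG export of the canvas.
    payload = build_make_real_request(b"not-a-real-png", "Home screen with a chat box")
    print(payload["messages"][1]["content"][0]["text"])
```

The payload would then be posted to the chat endpoint with an API key, and the HTML in the reply rendered back onto the canvas.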

The tldraw team released a starter template kit that I cloned to explore what I can do with tldraw and GPT-4V.

I wanted to see if I could develop a UI prototype for the Dear Menopause project that COMUZI has been working on with Impact on Urban Health.

The video below shows me attempting just that:

sketching an interface of the home screen & giving it some annotated instructions

giving GPT-4V instructions to utilise OpenAI’s chat API with a now-deleted key

getting a UI prototype of the Dear Menopause conversational engine

I will be exploring more make-real experiments over the next couple of weeks too.

I wouldn’t be surprised if we see this type of ‘draw a UI’ feature in your favourite design software sometime soon x

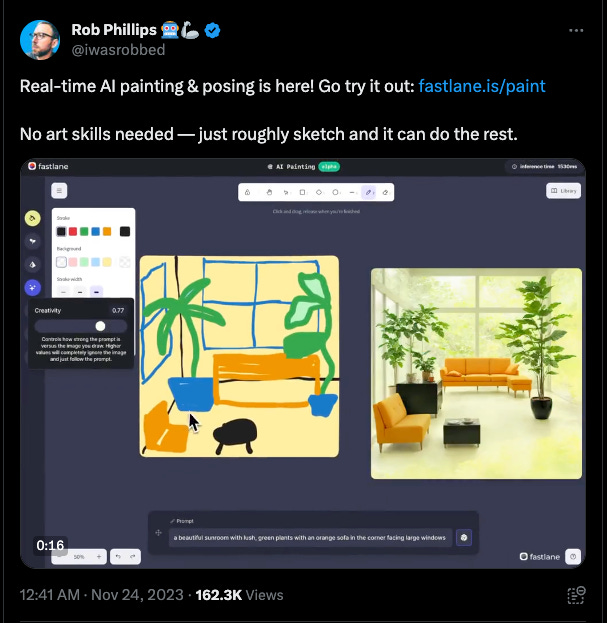

Real time painting with AI

Last thing for the week: another prototype, this one by tldraw + fal.ai, that demonstrates real-time AI image generation based on drawings on a canvas.

I had to try it out for myself. Loved playing with it, as an exploration of an interface that allows us to go beyond the prompt text box. However, while you can draw/paint, you are still not in control of the final output, so you must be ok with the randomness.

I tried out the alpha version of Fastlane’s AI painting tool, but I am not sure that I got it working well for me haha.

Not experiments but still worth sharing :)

In October, I sat on two panels at:

the Design for Planet Festival by the Design Council on how collaborations between designers and AI can both solve technical problems and imagine creative futures.

the Science Gallery London on how we design better AI that doesn't create or perpetuate health disparities.

Who am I?

I am Lex Fefegha, a creative technologist, designer and artist. I co-founded COMUZI with two of my best mates, Rich and Akil, ten years ago. I was formerly an associate lecturer in Computational Futures & AI at University of the Arts London’s Creative Computing Institute.

Hit me up if you want to talk about exploring generative AI capabilities for your organisation! Find out more at https://genai.comuzi.xyz/